Continuous integration and continuous deployment (CI/CD) explained

06 August 2025

Modern software development moves fast, but manual testing, building, and deployment processes don't. Continuous Integration/Continuous Deployment (CI/CD) automates these critical workflows, enabling teams to ship features quickly while maintaining quality and minimizing production issues. While CI focuses on automatically testing and building code changes, CD can mean either Continuous Delivery (preparing code for release) or Continuous Deployment (automatically releasing to production). Understanding this distinction is crucial for implementing the right strategy for your team.

This article will guide you through the fundamentals of CI/CD, share proven best practices, and explore advanced techniques that can transform your development workflow, including how modern platforms like Upsun can enhance your CI/CD pipeline with production-like preview environments.

What is continuous integration (CI)?

The CI in CI/CD stands for continuous integration: automatically running tests and building code on a remote server whenever changes are pushed. This process ensures that the latest commit in each branch passes all checks and can be safely released or merged into the main branch.

Common platforms for running CI include Jenkins, GitLab CI, and GitHub Actions. The examples in this article use GitLab CI.

The basic CI process includes code-style checking, running different types of tests, and building the project. On GitLab CI, such a pipeline would look like this (using the Node ecosystem for illustration):

```yaml
# .gitlab-ci.yml

image: node:20 # run every job in an official Node.js container

stages: # stages are executed in strict order of the list
  - build
  - test
  - deploy

build-code: # name of the job
  stage: build
  script:
    - npm install # install dependencies
    - npm run build # build code

code-style:
  stage: test
  script:
    - npm install # install dependencies
    - npm run lint # check code style

unit-tests:
  stage: test
  script:
    - npm install # install dependencies
    - npm run test:unit # run unit tests
```

Automating the code-checking process saves time and improves release safety by detecting bugs early. However, this only scratches the surface of what CI can accomplish. In larger projects, CI pipelines often extend far beyond just three stages.

Building a CI pipeline for a complex codebase may include the following:

- Multistage build processes. Complex builds can require many coordinated steps. For instance, a microservices architecture might involve independently building, testing, and packaging multiple services before deploying them together.

- Parallel and distributed job execution. A CI pipeline for a frontend and backend monorepo can run linting, unit tests, and code coverage checks for each component in parallel, reducing total build time.

- Incremental builds. In large projects, rebuilding only the modules that changed since the last commit can dramatically speed up iteration cycles, for example, in a game engine repository where assets and core logic evolve independently.

- Time and resource optimization. Job matrix configurations let you test against different environments (for example, an API on Node.js versions 16, 18, and 20) while minimizing redundant workflows.

- Dependency management and dependency caching. Efficiently caching large dependencies, like Docker layers or npm packages, to avoid downloading them for every build, especially in environments with limited bandwidth.

- Integrating different testing frameworks. Ensuring seamless execution of Jest for unit tests, Cypress or Playwright for end-to-end testing, and Postman for API contract testing.

- Handling flaky tests. Implementing retry strategies, for example, when testing a real-time messaging system whose tests occasionally fail due to race conditions.

- Collecting and reporting metrics. Visualizing trends in build success rates, test coverage, and pipeline execution times via dashboards to quickly identify bottlenecks or regressions.

These are just some examples. A well-designed CI pipeline can be tailored to address specific project needs, from improving build performance to enhancing code quality and team collaboration.
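Several of the items above map directly onto GitLab CI keywords. The sketch below is a hypothetical example that assumes a monorepo with an api/ directory and an npm script called test:integration, neither of which the earlier pipeline defines. It shows a job matrix (parallel:matrix) that creates one job per Node.js version, an incremental job that runs only when files under api/ change (rules:changes), and automatic retries for a flaky test job (retry).

```yaml
# Sketch only: the api/ directory and the test:integration script are hypothetical

api-tests:
  stage: test
  image: node:$NODE_VERSION # the image tag comes from the matrix variable below
  parallel:
    matrix:
      - NODE_VERSION: ["16", "18", "20"] # GitLab creates one job per listed version
  rules:
    - changes: # incremental: run only when API code changed
        - api/**/*
  script:
    - cd api
    - npm install
    - npm run test:unit

realtime-integration-tests:
  stage: test
  image: node:20
  retry:
    max: 2 # rerun up to two more times before reporting failure
    when: script_failure # retry only when the script fails, not on other failure types
  script:
    - npm install
    - npm run test:integration # hypothetical script for the messaging system tests
```

Retries hide flakiness rather than fix it, so it is worth tracking which jobs needed one. Also note that rules:changes evaluates to true on a new branch's first pipeline, when GitLab has nothing to compare against.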

What is continuous delivery (CD)?

Once the code has been built and tested in the CI stage, continuous delivery (CD) takes the build from the integration step and prepares it for release. This includes deploying the build to the staging or production environment, running another set of end-to-end tests, and uploading the binary to an internal registry.

The examples here use GitLab CI and the Node ecosystem, but the same logic can be implemented on any CI/CD platform, like Bitbucket Pipelines or GitHub Actions.

To extend the previous code sample with CD, you would add deploy stages to deploy the build to the staging or production environment:

```yaml
# the build-code, code-style, and unit-tests jobs from the previous example stay unchanged

stages:
  - build
  - test
  - deploy-to-staging
  - deploy-to-production

deploy-to-staging:
  stage: deploy-to-staging
  script:
    - npm install # install dependencies
    - npm run deploy:staging # deploy to staging

deploy-to-production:
  stage: deploy-to-production
  when: manual # this job can be started only manually, if the developer wants to deploy the build to production
  script:
    - npm install # install dependencies
    - npm run deploy:production # deploy to production
```

Basic automated deployments, like the deploy-to-staging step in the example above, are a good starting point for smaller projects or straightforward use cases. However, larger or more complex projects typically require additional techniques to enhance safety and control; you'll learn more about these later.

The deploy-to-production job in the example is manual rather than automated. The choice between automated and manual deployments depends on several factors, including the desired release frequency, test reliability, regulatory requirements, and management practices.

Using CD and automating delivery processes provides faster feedback on release quality, which supports lower-risk, more predictable releases.
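If you keep the production job manual, one possible refinement, sketched below on the assumption that releases should only ever come from the default branch, is to move when: manual into a rules entry and declare an environment so GitLab records each production deployment.

```yaml
deploy-to-production:
  stage: deploy-to-production
  environment:
    name: production # GitLab records each run of this job as a deployment to "production"
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH # offer the job only on the default branch
      when: manual # ...and still require someone to start it
  script:
    - npm install # install dependencies
    - npm run deploy:production # deploy to production
```

One behavioral difference to be aware of: a manual job defined through rules defaults to allow_failure: false, so the pipeline shows as blocked until someone runs it, whereas the top-level when: manual used earlier lets the pipeline finish without it.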

What is continuous deployment?

Continuous deployment is a form of continuous delivery where any code change that passes all checks is automatically deployed to production. While continuous delivery emphasizes having every change ready for deployment, continuous deployment takes it one step further by removing the manual approval step altogether.

To implement continuous deployment using the pipeline from the previous section, remove the when: manual flag from the deploy-to-production stage. If you don't need to deploy to the staging environment (assuming you are confident in the CI tests as the primary safeguard for code quality), you can also omit the deploy-to-staging stage:

```yaml
deploy-to-production:
  stage: deploy-to-production
  script:
    - npm install # install dependencies
    - npm run deploy:production # deploy to production
```

Continuous deployment offers the same benefits as continuous delivery: reduced manual effort, improved release consistency, and the ability to deliver high-quality updates continuously. However, it requires robust automated testing and monitoring, as code changes are deployed without manual review, increasing the risk of issues in production. It also demands a significant investment in infrastructure and processes to ensure reliability and enable quick rollbacks if needed.
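Because nothing stops a bad change once the manual gate is gone, it helps to add a few guardrails to the automated job. This sketch, assuming deployments should happen only from the default branch, uses resource_group to serialize production deployments and an environment entry so each release is recorded.

```yaml
deploy-to-production:
  stage: deploy-to-production
  environment:
    name: production # keep a deployment history for this environment
  resource_group: production # never run two production deployments at the same time
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH # deploy automatically, but only from the default branch
  script:
    - npm install # install dependencies
    - npm run deploy:production # deploy to production
```

With the environment recorded, GitLab's environment page lets you re-run an earlier successful deployment job, which gives you a basic rollback path without extra pipeline code.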

Note: While both continuous delivery and continuous deployment use "CD" as an acronym, the rest of this article uses "CD" to refer to continuous deployment.

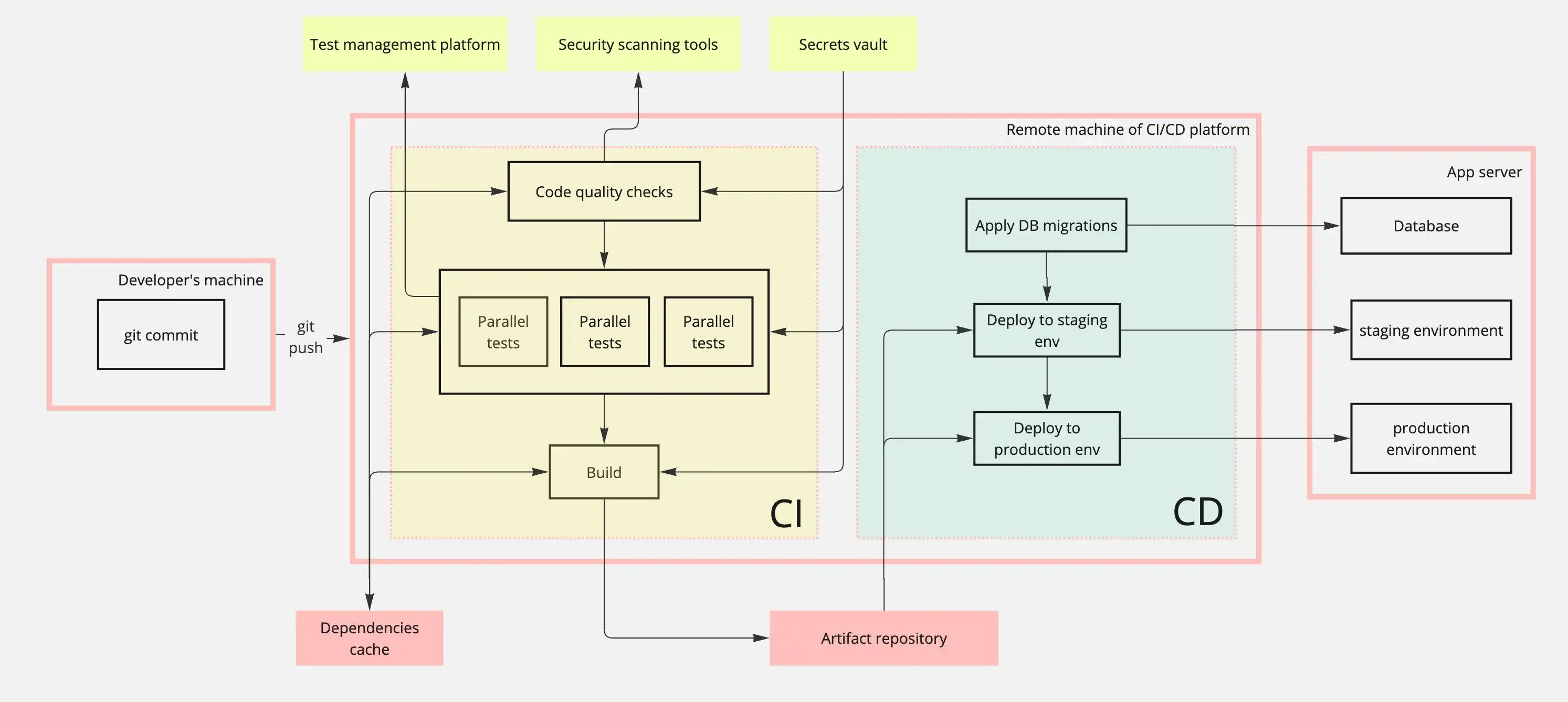

CI/CD pipeline architecture (putting together the complete picture)

Here's how continuous integration and deployment typically work in a DevOps pipeline:

- Code Push: The developer pushes code changes to their VCS repository (GitLab, GitHub, Bitbucket, etc.).

- Build & Test: CI automatically runs tests and builds the code on the CI/CD platform.

- Deploy: If the CI stage passes all checks, CD deploys the code to staging or production environments.

- Live Application: The updated application is now live and accessible to users.

As you can see, a complete pipeline involves many systems. Behind the scenes, each code push triggers the following:

- Automatic security scanning and quality checks

- Parallel test execution to minimize build time

- Secure injection of sensitive data (API keys, credentials) via secret management

- Dependency caching to speed up subsequent builds

- Automatic database migrations during deployment

During pipeline execution, several of these processes run side by side:

- Static security tools scan code changes for vulnerabilities.

- Tests run in parallel, and their results are sent to a test management platform.

- Once the final build artifact is generated, it's stored in the artifact repository and deployed to the appropriate environments.

This is just one example pipeline; additional components may be needed depending on your app's requirements.
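The artifact and test-report flow described in this section might look like the following in GitLab CI. It is a simplified sketch that assumes the build writes to dist/ and that the unit test script emits a JUnit XML file named junit.xml; here GitLab's own job artifact storage and merge request test reports stand in for a dedicated artifact repository and test management platform.

```yaml
# Sketch: dist/ and junit.xml are assumed output paths
default:
  image: node:20

stages:
  - build
  - test
  - deploy

build-code:
  stage: build
  script:
    - npm install
    - npm run build
  artifacts:
    paths:
      - dist/ # store the build output so later jobs can reuse it
    expire_in: 1 week

unit-tests:
  stage: test
  script:
    - npm install
    - npm run test:unit # assumed to write a JUnit XML report to junit.xml
  artifacts:
    when: always # upload the report even when tests fail
    reports:
      junit: junit.xml # GitLab shows these results in merge requests

deploy-to-staging:
  stage: deploy
  needs: [build-code, unit-tests] # download dist/ from build-code instead of rebuilding
  script:
    - npm install
    - npm run deploy:staging
```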

Advanced CI/CD techniques

As mentioned, if you have a smaller project, a basic automated deployment, like the example used earlier, would be a good starting point. However, larger or more complex projects typically require additional techniques to enhance safety and control.

One approach is using feature flags to deploy code with features that are hidden from users until they're deemed stable, allowing for incremental releases and easy rollback if necessary. There are different types of feature flags, such as release toggles, which enable gradual feature rollouts, and experiment flags, used for A/B testing. For example, an e-commerce website might develop a new search filter but keep it hidden behind a feature flag until thorough testing is complete, so it can be activated instantly when ready.

You can also use canary releases and rollbacks to mitigate the risk of introducing bugs, performance issues, or unintended user impacts during new feature rollouts. A canary release deploys the new version to a small segment of users first. You can then collect metrics and confirm that the feature performs as expected. If issues arise, you roll back the release for this small group only, which makes the process faster and less risky. You can take things a step further by automating rollbacks based on the metrics you chose earlier. Automation also removes the need to handle database migrations manually during rollbacks. Together, these techniques help you roll out smooth, incremental deployments to your users.
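A canary flow with a metrics-based automatic rollback can be expressed as pipeline stages. In this sketch, deploy:canary, check:canary, and rollback:canary are hypothetical npm scripts you would implement against your own hosting and monitoring stack; the pipeline only decides which one runs. The check job waits ten minutes as a delayed job, then fails if its thresholds are exceeded, which triggers the rollback job instead of the promotion.

```yaml
# Sketch: deploy:canary, check:canary, and rollback:canary are hypothetical npm scripts
default:
  image: node:20

stages:
  - canary
  - verify
  - release

deploy-canary:
  stage: canary
  script:
    - npm install
    - npm run deploy:canary # route a small share of traffic to the new build

check-canary-metrics:
  stage: verify
  when: delayed
  start_in: 10 minutes # let the canary receive real traffic before judging it
  script:
    - npm install
    - npm run check:canary # exit non-zero if error rate or latency exceed your thresholds

promote-canary:
  stage: release
  when: on_success # runs only if every job in earlier stages succeeded
  environment:
    name: production
  script:
    - npm install
    - npm run deploy:production # send all traffic to the new build

rollback-canary:
  stage: release
  when: on_failure # runs only if a job in an earlier stage failed, such as the metrics check
  script:
    - npm install
    - npm run rollback:canary # return canary traffic to the previous build
```

Keep in mind that when: on_failure fires if any job in an earlier stage failed, including the canary deployment itself, so the rollback script should tolerate running against a partially deployed canary.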

Monitoring and feedback tools are essential for gaining a comprehensive view of your services, thus ensuring a safe rollout and enabling quick rollbacks if issues occur. Tools like Blackfire.io are valuable additions to the release process. Key metrics to monitor include error rates, request latency, deployment progress (especially for canary deployments), feature flag success and failure rates, and user engagement metrics. These insights help identify problems, such as increased errors or user abandonment, prompting rollbacks if necessary. Monitoring tools also enable configurable alerts and rollout-specific dashboards to track critical metrics, thereby improving observability and contributing to the safe and efficient release of updates.

Best practices for CI/CD implementation

There are also some best practices you can follow to improve workflows while enhancing the reliability and security of the CI/CD process.

Test automation

Test automation in CI/CD ensures changes are fully tested without manual intervention, saving time and reducing risk. Following the test pyramid (unit tests at the base, integration tests in the middle, and end-to-end (E2E) tests at the top) balances coverage and speed, so issues are caught at the right level without overloading the pipeline.

Code coverage, mutation testing, and test data management further strengthen CI/CD testing. Code coverage helps verify that essential areas are tested, while mutation testing identifies weak spots by checking whether tests catch intentionally introduced errors. Managing test data through mocks or snapshots improves reliability and repeatability, and with it the overall quality of the software. For efficiency, running tests in parallel, selectively running only impacted tests, and isolating environments can reduce test time and improve stability.
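Here is one way to lay out the test pyramid as pipeline stages so the cheap, numerous tests run first. The sketch assumes that test:unit runs Jest (so --coverage and the coverage regex for Jest's text summary apply), that StrykerJS and Playwright are installed as dev dependencies, and that test:integration is a hypothetical script. The Playwright image tag should match the installed Playwright version.

```yaml
# Sketch: tool choices and the test:integration script are assumptions
default:
  image: node:20

stages:
  - unit
  - integration
  - e2e

unit-tests: # base of the pyramid: many fast tests, run first
  stage: unit
  script:
    - npm install
    - npm run test:unit -- --coverage # pass --coverage through to Jest
  coverage: '/All files[^|]*\|[^|]*\s+([\d\.]+)/' # extract the percentage from Jest's text summary

mutation-tests: # checks whether the tests catch deliberately injected faults
  stage: unit
  allow_failure: true # report weak spots without blocking the pipeline
  script:
    - npm install
    - npx stryker run

integration-tests: # middle of the pyramid
  stage: integration
  script:
    - npm install
    - npm run test:integration

e2e-tests: # top of the pyramid: few, slow tests, run last
  stage: e2e
  image: mcr.microsoft.com/playwright:v1.45.0-jammy # browsers preinstalled
  script:
    - npm install
    - npx playwright test
```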

Optimizations

Optimizing CI/CD pipelines shortens the time developers spend waiting and, therefore, lets you deploy changes faster. Pipeline time also drives cost, as most platforms charge based on usage time and resources consumed. Here are three examples of how you might optimize your pipeline:

- Caching dependencies. By storing and reusing previously downloaded libraries or modules, you can avoid redundant installations, saving both time and billable minutes during pipeline runs.

- Running jobs in parallel. This allows multiple checks to execute simultaneously, significantly cutting down overall build and test times. For example, a large set of end-to-end tests can be divided into smaller subsets and run in parallel, completing the process much faster.

- Optimizing test suites. Run critical tests first to catch failures early, and skip later jobs when those tests fail. One of the most effective optimization techniques is running only the tests affected by recent changes.

Other optimizations include optimizing Docker images and efficiently managing dependencies, both those the CI jobs need and those used for the build.
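The three optimizations above can be combined in a single GitLab CI configuration. This sketch assumes the project uses Jest for unit tests and Playwright for end-to-end tests. It caches npm downloads keyed on package-lock.json, shards the end-to-end suite across four parallel jobs, and runs only the unit tests related to files changed relative to the default branch.

```yaml
default:
  image: node:20
  cache:
    key:
      files:
        - package-lock.json # start a fresh cache whenever dependencies change
    paths:
      - .npm/ # npm's download cache, kept inside the project directory

e2e-tests:
  stage: test
  image: mcr.microsoft.com/playwright:v1.45.0-jammy
  parallel: 4 # four jobs; CI_NODE_INDEX runs from 1 to 4, CI_NODE_TOTAL is 4
  script:
    - npm ci --cache .npm --prefer-offline # install from the cached downloads when possible
    - npx playwright test --shard=$CI_NODE_INDEX/$CI_NODE_TOTAL # each job runs one slice

affected-unit-tests:
  stage: test
  variables:
    GIT_DEPTH: 0 # disable shallow cloning so the branches can be compared
  script:
    - git fetch origin "+$CI_DEFAULT_BRANCH:refs/remotes/origin/$CI_DEFAULT_BRANCH"
    - npm ci --cache .npm --prefer-offline
    - npx jest --changedSince=origin/$CI_DEFAULT_BRANCH --passWithNoTests # only tests related to changed files
```

Running the affected-tests job alongside, rather than instead of, a periodic full test run is a reasonable safeguard, since dependency tracing can miss indirect effects such as configuration changes.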

Security

CI/CD processes have distinct security requirements: both the pipeline and the applications it delivers must stay protected from vulnerabilities. That calls for a multifaceted approach that identifies vulnerabilities at different stages of development and deployment. Static Application Security Testing (SAST) scans source code or binaries to identify security vulnerabilities early in development. Dynamic Application Security Testing (DAST) analyzes running applications to detect issues like injection attacks and configuration flaws. Software Composition Analysis (SCA) examines third-party dependencies and libraries for known vulnerabilities and license compliance. Together, these tools secure applications throughout the development and deployment lifecycle.
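On GitLab, static scanning and dependency analysis can be switched on by including GitLab-maintained CI templates. The template names below match recent GitLab documentation, but check them against your GitLab version; some scanners, and the dashboards that display their results, require a paid tier. DAST needs a running app, so it usually runs as a separate job against a deployed environment.

```yaml
stages:
  - build
  - test # the included scanner jobs run in the test stage by default

include:
  - template: Jobs/SAST.gitlab-ci.yml # SAST: static analysis of the source code
  - template: Jobs/Dependency-Scanning.gitlab-ci.yml # SCA: known-vulnerable dependencies
  - template: Jobs/Secret-Detection.gitlab-ci.yml # leaked keys and tokens in commits
```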

The example pipeline described earlier runs static security tools alongside its code quality checks to look for vulnerabilities in the code. To run dynamic security testing against a running app, you can set up an additional job that waits for the build to be generated, deploys it to a preview environment (possibly one hosted on Upsun), and then runs a DAST tool, like OWASP ZAP, against it.
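A DAST job against a preview environment might look like this. The deploy:preview script and the preview URL pattern are placeholders for however your hosting platform exposes per-branch environments. The scan uses the baseline script shipped in the official ZAP container image, which performs a passive scan.

```yaml
# Sketch: deploy:preview and the example.com URL pattern are hypothetical
stages:
  - preview
  - dast

deploy-preview:
  stage: preview
  image: node:20
  environment:
    name: review/$CI_COMMIT_REF_SLUG
    url: https://$CI_COMMIT_REF_SLUG.preview.example.com # placeholder URL
  script:
    - npm install
    - npm run deploy:preview # hypothetical script that deploys this branch

zap-baseline:
  stage: dast
  image: ghcr.io/zaproxy/zaproxy:stable
  needs: [deploy-preview] # start as soon as the preview is deployed
  variables:
    PREVIEW_URL: https://$CI_COMMIT_REF_SLUG.preview.example.com # must match the environment URL
  script:
    - mkdir -p /zap/wrk # working directory the ZAP scripts expect
    - zap-baseline.py -t "$PREVIEW_URL" # a non-zero exit code fails the job
```

zap-baseline.py exits non-zero on warnings as well as failures, so this job also fails on warnings; its -I option reports warnings without failing.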

Sensitive data, like API keys, should be securely stored and injected into pipelines. You can use the built-in secrets management of your CI/CD platform to encrypt the stored data and safely inject it into the pipeline code. If you have advanced requirements, such as dynamic key generation or a flexible permissions system, you might want to use external services, like HashiCorp Vault or AWS Secrets Manager.
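Two common ways to inject a secret on GitLab are sketched below. DEPLOY_TOKEN, the Vault path, and the audience URL are hypothetical. Option 1 relies on a masked, protected CI/CD variable; protected variables are only exposed to pipelines running on protected branches or tags. Option 2 uses GitLab's built-in HashiCorp Vault integration, which requires GitLab Premium and a configured Vault server.

```yaml
# Option 1: DEPLOY_TOKEN is defined under Settings > CI/CD > Variables as masked
# and protected. GitLab injects it as an environment variable and masks its value
# in job logs, so nothing secret lives in this file.
deploy-to-production:
  stage: deploy-to-production
  script:
    - npm install
    - npm run deploy:production # reads DEPLOY_TOKEN from the environment

# Option 2: fetch the same secret from HashiCorp Vault when the job starts
# (also requires a VAULT_SERVER_URL CI/CD variable pointing at your Vault)
deploy-to-production-vault:
  stage: deploy-to-production
  id_tokens:
    VAULT_ID_TOKEN:
      aud: https://vault.example.com # placeholder audience for Vault's JWT auth
  secrets:
    DEPLOY_TOKEN:
      vault: ci/deploy/token@secret # hypothetical: field "token" at path ci/deploy in the "secret" mount
      token: $VAULT_ID_TOKEN # authenticate with the ID token above
      file: false # expose the value itself instead of a path to a temporary file
  script:
    - npm install
    - npm run deploy:production
```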

If your pipeline publishes any artifacts, like builds or test results, secure them from unauthorized access so that private information generated by your pipeline doesn't leak. You can also add a step that scans artifacts to ensure that no sensitive data was accidentally included and that they contain no potentially harmful code.
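On GitLab, who can download a job's artifacts, and how long they are kept, can be set on the job itself. This sketch assumes a recent GitLab version that supports the artifacts:access keyword; dist/ is an assumed build output directory.

```yaml
build-code:
  stage: build
  image: node:20
  script:
    - npm install
    - npm run build
  artifacts:
    paths:
      - dist/ # assumed build output directory
    expire_in: 3 days # don't keep builds around longer than needed
    access: developer # only project members with the Developer role or higher can download
```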

What’s next

This article explored the core concepts of CI/CD, best practices for implementation, and advanced techniques that can transform your release process. The key benefits of CI/CD include faster time-to-market, improved reliability, reduced manual errors, and the ability to scale development workflows for complex projects.

But here's the challenge: Even with solid CI/CD pipelines, testing in production-like environments remains a bottleneck. Many teams struggle with environment inconsistencies, limited staging resources, and the inability to test safely with real data.

This is where Upsun enhances your existing CI/CD workflows. Rather than replacing your CI/CD platform, Upsun integrates seamlessly with GitHub Actions, GitLab CI, Jenkins, and other tools to solve the deployment and testing challenges that pipelines alone can't address.

Instead of managing complex deployment infrastructure, your CI/CD pipeline can focus on what it does best (building and testing code) while Upsun handles deployment, environment provisioning, and production-grade hosting.

Ready to enhance your CI/CD pipeline? Start your free Upsun trial and experience seamless deployments with production-like testing environments that integrate with your existing workflows.
