Introduction to message queues and their use cases

Tags: cloud application platform, PaaS, developer workflow, DevOps

06 August 2025

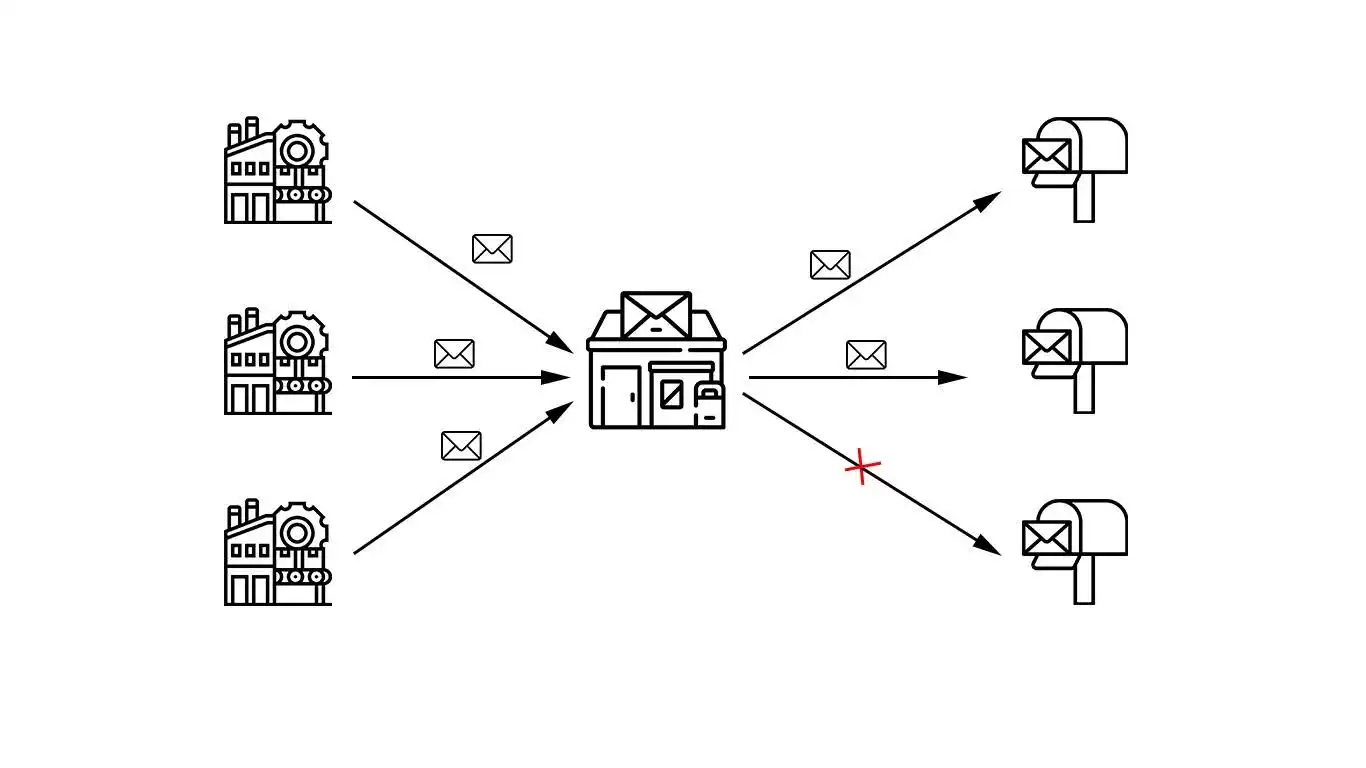

Due to the rising complexity of many organizations' digital stacks, message queues have become a vital component of their software architectures. Instead of maintaining point-to-point integrations of all components, message queues enable asynchronous communication between an architecture's different parts.

Architectures in which a message queue is a central component are often referred to as decoupled systems. Even when the consuming system is temporarily down, the messages created by the producing systems are stored in the queue until it recovers. This makes these systems much less prone to cascading failures. Scaling is also less troublesome: a producer, a consumer, or the queue itself can be upgraded independently, so a slow component is less likely to become a bottleneck for the rest of the architecture.

In this article, you'll learn about the ins and outs of message queues, the most popular technologies, and various use cases to get started.

What is a message queue?

In essence, a message queue is like a mailbox. Someone (the publisher) posts a letter (the message) in a mailbox (the message queue), which is then picked up by the receiver (the subscriber). However, there is one difference: in a message queue, multiple consumers can receive the message.

The sender and receiver don't need to be active at the same time. The queue is like a buffer; it stores messages until the consumer is ready to receive them.
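The buffering behavior can be illustrated with a minimal in-process sketch. It uses Python's standard `queue.Queue` as a stand-in for a real broker; the names `mailbox`, `publish`, and `consume_all` are made up for this example, and a real message queue would also persist messages and run in a separate process.

```python
import queue

# In-process stand-in for a message queue (assumption: no persistence, no network).
mailbox = queue.Queue()

def publish(message: str) -> None:
    """Publisher side: put a message in the queue and return immediately."""
    mailbox.put(message)

def consume_all() -> list[str]:
    """Consumer side: drain whatever has accumulated, in arrival order."""
    received = []
    while not mailbox.empty():
        received.append(mailbox.get())
        mailbox.task_done()
    return received

# The publisher runs while no consumer is active...
publish("order-1 created")
publish("order-2 created")

# ...and the consumer picks the messages up later.
backlog = consume_all()
print(backlog)
```

The publisher never waits for the consumer; the queue holds both messages until `consume_all` is called.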

Publisher

The publisher (or producer) creates and sends messages. This can be anything—a server producing logs, an application sending data to a database, and even a cloud storage bucket sending CSV files row by row. In a decoupled system, the publisher operates independently, without concern for who will consume the messages.

Message

The message contains the actual data being transmitted from publisher to subscriber. It can be anything, from a simple text string or a JSON object to an XML document or even serialized code. A message usually also carries headers with metadata, which provide context and instructions about the message itself: for example, the sender ID, timestamp, priority, or, in some systems, information about the intended recipient.
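As a rough illustration, a message with a body and metadata headers could be modeled as follows. The `Message` class and the header names (`message_id`, `sender_id`, `timestamp`, `priority`) are assumptions chosen for this sketch; every broker defines its own header set and wire format.

```python
import json
import time
import uuid
from dataclasses import dataclass, field, asdict

@dataclass
class Message:
    body: dict                                    # the payload itself
    headers: dict = field(default_factory=dict)   # metadata about the payload

def make_message(body: dict, sender_id: str, priority: int = 0) -> Message:
    """Wrap a payload with illustrative metadata headers."""
    headers = {
        "message_id": str(uuid.uuid4()),
        "sender_id": sender_id,
        "timestamp": time.time(),
        "priority": priority,
    }
    return Message(body=body, headers=headers)

def serialize(message: Message) -> bytes:
    """Encode the message as JSON bytes, ready to hand to a queue."""
    return json.dumps(asdict(message)).encode("utf-8")

def deserialize(raw: bytes) -> Message:
    """Decode JSON bytes back into a Message on the consumer side."""
    data = json.loads(raw.decode("utf-8"))
    return Message(body=data["body"], headers=data["headers"])

original = make_message({"event": "user_signed_up", "user": 42}, sender_id="web-app")
restored = deserialize(serialize(original))
```

Keeping metadata in headers means a broker or consumer can route or prioritize a message without parsing its body.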

Message queue

The message queue stores messages temporarily and is responsible for delivering the message to one or multiple subscribers. Some message queues support maintaining a specific message order, like First-In-First-Out. However, ordered delivery may involve some trade-offs, like complexity and throughput. For example, if one specific message is delayed while waiting to be consumed, it can block all subsequent messages in the queue, causing the queue to grow rapidly.

Subscriber

The subscriber (or consumer) listens to the queue and takes (relevant) messages to process them. Like a publisher, a subscriber can be anything: another application, a service that updates a database, or any other component that needs the information.

Topic

Some message queues include an exchange or broker system, a routing system that acts as a traffic controller for messages. The exchange decides which queue or subscriber should receive a message based on predefined rules. One common type of exchange is a topic exchange. This approach involves tagging messages with a routing key, a string separated by periods. Subscribers or queues use binding keys to filter and subscribe to messages that match specific routing patterns.

Here's an example from a sports website:

A publisher sends the final scores of sports matches from all sports and all leagues around the world with routing keys like "cricket.india.ipl" and "soccer.uk.premierleague". A queue bound with a wildcard binding key such as "soccer.#" (RabbitMQ syntax, where "#" matches zero or more words) collects all the messages about soccer, which subscribers can consume to receive all soccer results from around the world.
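To make the matching rules concrete, here is a small sketch of topic matching using the wildcard conventions of RabbitMQ's topic exchange, where `*` stands for exactly one word and `#` for zero or more words. The function `topic_matches` is written for this example and is not part of any client library; a real broker does this matching for you.

```python
def topic_matches(binding_key: str, routing_key: str) -> bool:
    """Return True if routing_key matches binding_key.

    '*' matches exactly one dot-separated word, '#' matches zero or more.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    def match(p: int, w: int) -> bool:
        if p == len(pattern):
            # Pattern exhausted: it matches only if all words are consumed too.
            return w == len(words)
        if pattern[p] == "#":
            # '#' may absorb zero or more of the remaining words.
            return any(match(p + 1, k) for k in range(w, len(words) + 1))
        if w == len(words):
            return False
        if pattern[p] == "*" or pattern[p] == words[w]:
            return match(p + 1, w + 1)
        return False

    return match(0, 0)

print(topic_matches("soccer.#", "soccer.uk.premierleague"))
print(topic_matches("soccer.#", "cricket.india.ipl"))
```

Under these rules a bare binding key `soccer` matches only the exact routing key `soccer`, so subscribing to every soccer result requires a wildcard such as `soccer.#`.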

Acknowledgment

Some systems support acknowledgment (or "ack") of messages to ensure reliable message delivery. It's essentially a signal from the consumer to the message queue that a message has been successfully received and processed. Until the queue receives that signal, it can redeliver the message, which prevents message loss; once it has, it can discard the message so that it isn't processed again. There are various delivery guarantee strategies:

- At-most-once. Messages are delivered once or not at all, so a message can be lost but is never duplicated.

- At-least-once. Messages are delivered at least once, but could also be duplicated.

- Exactly-once. Messages are delivered exactly once. This is the most complex guarantee to implement because the broker and the consumer have to cooperate, typically through deduplication, idempotent processing, or transactions.
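The interplay between acknowledgments and duplicates can be sketched with a toy queue. Everything here (`AckQueue`, `redeliver_unacked`, `handle`) is hypothetical and exists only for illustration: the queue redelivers anything that was not acked (at-least-once), and the consumer remembers message IDs so that a redelivered message is not processed twice.

```python
from collections import deque

class AckQueue:
    """Toy queue: a delivered message stays pending until the consumer acks it."""

    def __init__(self) -> None:
        self._ready = deque()
        self._unacked = {}

    def publish(self, message_id: str, body: str) -> None:
        self._ready.append((message_id, body))

    def receive(self):
        if not self._ready:
            return None
        message_id, body = self._ready.popleft()
        self._unacked[message_id] = body
        return message_id, body

    def ack(self, message_id: str) -> None:
        self._unacked.pop(message_id, None)

    def redeliver_unacked(self) -> None:
        """Simulate a timeout: unacked messages go back into the queue."""
        for message_id, body in self._unacked.items():
            self._ready.append((message_id, body))
        self._unacked.clear()

processed_ids = set()
results = []

def handle(message_id: str, body: str) -> None:
    """Idempotent consumer: skip messages that were already processed."""
    if message_id in processed_ids:
        return
    processed_ids.add(message_id)
    results.append(body)

q = AckQueue()
q.publish("m1", "charge customer 17")

# First delivery: the consumer processes the message but crashes before acking.
message_id, body = q.receive()
handle(message_id, body)
q.redeliver_unacked()

# Second delivery of the same message: the duplicate is detected, then acked.
message_id, body = q.receive()
handle(message_id, body)
q.ack(message_id)
```

The message is delivered twice but its effect is applied once, which is how at-least-once delivery combined with an idempotent consumer approximates exactly-once processing.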

Messaging patterns

Messaging systems follow a range of communication patterns, with each pattern supporting different kinds of use cases.

- One-to-one. Messages are delivered over a single topic by a single publisher to a single predefined subscriber.

- One-to-many (fan out). Messages are delivered over a topic by a single publisher to multiple consumers. Ensuring that at least one consumer reads the message can be achieved with an at-least-once delivery guarantee strategy. However, ensuring that all subscribers receive and process the message (atomicity) requires some extra steps, such as a two-phase commit (2PC) or a saga pattern.

- Many-to-one (fan in). In a many-to-one pattern, messages are delivered over a topic by multiple publishers to a single consumer. This pattern is typically used when multiple systems produce streams of data, like logs or database changes, which are collected and stored in a single system.

- Many-to-many (load balanced). Finally, in a many-to-many pattern, messages are delivered over a topic by multiple publishers to multiple consumers. Like with the one-to-many pattern, ensuring atomicity requires some extra engineering.
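The difference between fanning a message out to every consumer and balancing messages across consumers can be sketched in a few lines. The helper names `fan_out` and `competing_consumers` are invented for this example, and round-robin is only one possible distribution strategy.

```python
from itertools import cycle

def fan_out(messages, subscribers):
    """Fan out: every subscriber gets its own copy of every message."""
    inboxes = {name: [] for name in subscribers}
    for message in messages:
        for name in subscribers:
            inboxes[name].append(message)
    return inboxes

def competing_consumers(messages, workers):
    """Load balanced: each message goes to exactly one worker (round-robin)."""
    inboxes = {name: [] for name in workers}
    for message, name in zip(messages, cycle(workers)):
        inboxes[name].append(message)
    return inboxes

messages = ["a", "b", "c"]
copies = fan_out(messages, ["email", "audit"])
shares = competing_consumers(messages, ["worker-1", "worker-2"])
print(copies)
print(shares)
```

With fan out, both subscribers see all three messages; with competing consumers, the three messages are split between the two workers.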

Popular message queue technologies

Before moving on to real-world use cases, let's discuss some popular message queueing technologies.

RabbitMQ

RabbitMQ is a mature product and is widely used within IoT environments. It takes only a couple of minutes to set up and is known for its very low latency. Furthermore, it supports complex routing use cases. RabbitMQ is open source, and although it's written in Erlang, client libraries are available for most programming languages.

ActiveMQ

Like RabbitMQ, ActiveMQ is a mature and widely used product. It is built entirely in Java and excels in Java-based enterprise environments. While it doesn't match RabbitMQ's low latency and is not as advanced in terms of routing features, it's more suited for high-throughput cases given its horizontal scalability features. It's also open source, with clients available for many programming languages.

Apache Kafka

Kafka is a modern queuing system with real-time processing and data manipulation. It has risen to prominence in recent years as the go-to solution for streaming applications. Because of its distributed architecture, it has low latency, supports high throughput, and is fault-tolerant. The downside is that it is harder to set up and more expensive to maintain due to its distributed nature. While Kafka is open source, many providers offer it as a service, including Confluent, the company founded by Kafka's original developers.

Message queues as a service: Amazon SQS, Azure Service Bus, and GCP Pub/Sub

This brings us to the managed services. Each of the three largest cloud service providers has its own message queue technology. What they all have in common is that they integrate closely with dozens of other cloud services from their respective provider. However, each excels at one specific aspect. Azure's Service Bus is feature-rich and supports complex use cases. Amazon SQS is cost-effective: it doesn't excel at speed and throughput, nor in terms of features, but it gives the best bang for the buck. Finally, GCP Pub/Sub has the lowest latency and highest throughput, but its pricing model is complex and might result in unexpected costs.

| Feature | RabbitMQ | ActiveMQ | Apache Kafka | Managed |
|---|---|---|---|---|
| Latency | Low | Medium | Low | Vendor-specific |
| Throughput | High | Very high | High | Vendor-specific |
| Routing | High complexity | Medium complexity | High complexity | Vendor-specific |
| Config & maintenance | Simple | Simple | Complex | None |
| License | Open source | Open source | Open source | Proprietary |

Message queues in action

Now that we have established an overview of message queues and their features, it's time to outline a variety of use cases from different sectors.

Use case 1: Payment processing

Payment processing systems, such as Stripe, utilize a message queue to handle asynchronous communication between different components of the payment processing pipeline. When a customer initiates a payment, the web application publishes a "payment_request" message to the queue. This message would contain essential details like the customer ID, order ID, amount, and payment method.

A suitable routing key for this scenario could be "payment.process", allowing for filtering and prioritization if needed. Since multiple services might need to react to a successful payment (for example, inventory, shipping, and accounting), a one-to-many messaging pattern is appropriate, perhaps with a broker, to ensure data consistency. A saga pattern can roll back any changes in case of failures in downstream services. Finally, the system requires an "at-least-once" delivery guarantee to ensure that no payment requests are lost, even in case of temporary failures.
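The rollback behavior of a saga can be sketched as a list of steps, each paired with a compensating action. This is a minimal, single-process illustration with invented step names; in a real payment pipeline each step would be a separate service reacting to messages, and the compensations would themselves be triggered by messages.

```python
def run_saga(steps):
    """Run (name, action, compensation) steps in order.

    If a step fails, run the compensations of the completed steps
    in reverse order and report failure.
    """
    completed = []
    for name, action, compensate in steps:
        try:
            action()
        except Exception:
            for _, undo in reversed(completed):
                undo()
            return False
        completed.append((name, compensate))
    return True

log = []

def fail_shipping():
    # Hypothetical downstream failure used to trigger the rollback.
    raise RuntimeError("shipping service unavailable")

steps = [
    ("payment", lambda: log.append("charge card"), lambda: log.append("refund card")),
    ("inventory", lambda: log.append("reserve stock"), lambda: log.append("release stock")),
    ("shipping", fail_shipping, lambda: log.append("cancel shipment")),
]

ok = run_saga(steps)
print(ok, log)
```

Because shipping fails, the stock reservation and the charge are undone in reverse order, which is the consistency property the saga is meant to provide.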

Use case 2: Mobile multiplayer games

In mobile games, each game server typically publishes in-game events (such as player actions, game state updates, and chat messages) to the message queue using appropriate routing keys like "game.{game_id}.event" or "player.{player_id}.action".

Here's how that would work:

- When players participate in an online event, like raiding a dungeon, they are subscribing to the events for that particular dungeon instance.

- Anytime players interact, from encountering each other on the same map or sending each other text messages to engaging in combat, they are subscribing to each other's events.

- A "notification_service" would subscribe to relevant topics to gather events that trigger notifications, like achieving a high score, receiving a friend request, or starting a new match. This service would then generate and deliver notifications to players' devices through a push notification service.

For this scenario, an "at-least-once" delivery guarantee is crucial to ensure that critical game events and notifications are not lost. The messaging pattern would be many-to-many as multiple players interact with each other and the game server. In gaming, latency matters a lot, so a 2PC pattern isn't suitable. A saga pattern, however, can ensure data consistency across different game services.

Use case 3: Load balancing

A load balancer would initially receive all incoming requests. Instead of directly forwarding them to backend servers, the load balancer would publish each request as a message to the message queue. Multiple worker instances would subscribe to this queue, consuming messages and processing the requests. The message queue effectively distributes the workload among the available workers, ensuring no single server is overwhelmed. This approach also provides resilience; if a worker fails, the message remains in the queue for another worker to pick up.

An appropriate routing key for this scenario could be "request.process," allowing for potential prioritization or filtering of requests in the future. An "at-least-once" delivery guarantee is necessary to ensure that no request is lost due to worker failures. The messaging pattern here is one-to-many in the sense that a single publisher feeds many workers, but each request message is processed by only one of them, an arrangement often called competing consumers.
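A worker pool pulling from a shared queue can be sketched with Python's standard `queue` and `threading` modules. This is an in-process approximation: the thread count, the `None` sentinel, and the `handled_by` bookkeeping are choices made for the example, whereas a real deployment would use separate worker processes connected to a broker.

```python
import queue
import threading

requests = queue.Queue()
handled_by = {}              # request id -> name of the worker that processed it
lock = threading.Lock()

def worker(name: str) -> None:
    """Pull requests off the shared queue until a sentinel arrives."""
    while True:
        request_id = requests.get()
        if request_id is None:       # sentinel: no more work for this worker
            requests.task_done()
            break
        with lock:
            handled_by[request_id] = name
        requests.task_done()

workers = [threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(3)]
for t in workers:
    t.start()

# The "load balancer" publishes every incoming request to the queue.
for request_id in range(10):
    requests.put(request_id)

# One sentinel per worker so that each of them shuts down cleanly.
for _ in workers:
    requests.put(None)

requests.join()
for t in workers:
    t.join()

print(len(handled_by), "requests processed")
```

Each request is taken by whichever worker is free, so the exact distribution varies between runs, but every request is processed exactly once.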

Use case 4: Data streaming

Assume you want to track all database changes: A Debezium connector is deployed alongside a database to capture its changes. These changes are transformed into events and published to a message queue. The message queue acts as a central hub for distributing these change events to various consumers. A streaming platform, like Kafka, can be used to transform the events in real time and load them into the data warehouse for real-time analytics.

A suitable routing key strategy could be "database.{db_name}.{table_name}.{operation}", allowing subscribers to filter events based on the specific database, table, or operation type (create, update, delete). An "at-least-once" delivery guarantee is important to ensure that no database changes are missed by the data warehouse. The messaging pattern here is typically one-to-many, as a single database change event can be relevant to multiple consumers besides the data warehouse.
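The routing key strategy above can be sketched as a pair of helpers, one that builds keys and one that filters them. The function names and the sample database, table, and operation values are assumptions for illustration, and the sketch assumes that names contain no periods; it does not reproduce the event format Debezium actually emits.

```python
def routing_key(db_name: str, table_name: str, operation: str) -> str:
    """Build a key in the database.{db_name}.{table_name}.{operation} shape."""
    return f"database.{db_name}.{table_name}.{operation}"

def select(keys, table_name=None, operation=None):
    """Keep the keys whose table and/or operation match the given filters."""
    selected = []
    for key in keys:
        # Assumes exactly four dot-separated parts (no dots inside names).
        _, _, table, op = key.split(".")
        if table_name is not None and table != table_name:
            continue
        if operation is not None and op != operation:
            continue
        selected.append(key)
    return selected

keys = [
    routing_key("shop", "orders", "create"),
    routing_key("shop", "orders", "delete"),
    routing_key("shop", "customers", "update"),
]

order_events = select(keys, table_name="orders")
deletes = select(keys, operation="delete")
print(order_events)
print(deletes)
```

A data warehouse loader could subscribe to everything, while an audit service might only care about delete operations.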

How Upsun simplifies message queue management

Setting up and running message queues shouldn't require a dedicated DevOps team. Upsun transforms complex message queue operations into simple, developer-friendly workflows that let you focus on building features instead of managing infrastructure.

Deploy in minutes

Traditional message queue setup involves dozens of configuration steps, security hardening, and extensive testing. You'll spend days installing software, configuring user permissions, setting up SSL certificates, establishing clustering for high availability, configuring monitoring and alerting, setting up automated backups, and performing security hardening.

With Upsun, you simply define your requirements in a configuration file, and we handle everything else. Within minutes, you have a production-ready message queue with automatic clustering, SSL encryption, monitoring, backups, and security patches already configured.

Test with real production data

The biggest challenge with message queues is that local development environments can't replicate production behavior. Messages that work perfectly on your laptop can fail spectacularly with real data volumes and patterns.

Upsun solves this with perfect environment cloning. You can instantly create a complete copy of your production environment, including exact queue configurations, real message patterns and volumes, same performance characteristics, and production-like data that can be optionally anonymized.

Example: An e-commerce company needed to test a new order processing system during their busy season. Instead of guessing how it would perform, they cloned their production environment with real Black Friday message volumes. They discovered and fixed three performance bottlenecks before deployment, avoiding potential downtime during their highest revenue day.

Smart monitoring

Most monitoring tools show you that something is wrong, but not what to do about it. Traditional monitoring might tell you "Queue depth: 10,000 messages" or "Consumer lag: 5 minutes" without explaining what this means or how to fix it.

Upsun's integrated observability provides actionable insights. Instead of cryptic metrics, you get clear problem descriptions like "Payment messages are being processed out of order" with suggested fixes like "Enable single-active-consumer mode" and one-click resolution options.

Automatic scaling

Message queue traffic is unpredictable. A viral social media post or flash sale can instantly overwhelm your system. Upsun automatically scales your message queues based on real-time demand.

During normal traffic, your queues run on minimal resources to keep costs low. When traffic spikes hit, the system instantly scales up to handle the load. Once the spike ends, resources automatically scale back down. You're charged only for what you use.

Integration with your development workflow

Upsun message queues work seamlessly with your existing tools and processes.

Every change to your message queue setup goes through your normal code review process using git-based configuration, eliminating "configuration drift" between environments. Queue connection details are automatically injected into your applications as environment variables, removing the need for hardcoded credentials or manual configuration updates.

You can view your application metrics, database performance, and message queue health in one unified dashboard, eliminating the need to juggle between multiple monitoring tools.

Conclusion

Message queues have become the backbone of modern, scalable applications—but implementing and managing them shouldn't slow down your development process. While the concepts are straightforward, the operational complexity of running message queues in production can quickly become overwhelming.

Managing delivery guarantees, durability, persistence, and ordering across different message queue technologies is complex and prone to error. Each broker has its own configuration nuances, monitoring requirements, and failure modes that require specialized expertise to handle properly.

This is where Upsun comes in. As a developer-focused cloud application platform, Upsun takes the operational burden off your shoulders by providing pre-configured, battle-tested setups for both RabbitMQ and Kafka that handle durability, persistence, and ordering concerns out of the box. Whether you need RabbitMQ for complex routing scenarios or Kafka for high-throughput streaming, Upsun provides fully managed services with automatic scaling, monitoring, and maintenance.

Upsun's integrated monitoring goes beyond basic metrics: it shows you exactly when messages are being processed out of order or when durability guarantees are being violated, before your customers notice. With Upsun's git-based workflow and instant environment cloning, you can test your message queue configurations with production-like data before deployment, eliminating the guesswork that often leads to production issues.

Ready to implement message queues without the operational headaches? Start building with Upsun today and focus on what matters most: your application logic, not infrastructure management.
